As AI-driven attacks blur the lines between truth and deception, will your identity be the greatest casualty?

Picture this: attackers using AI to learn, adapt, and strike with unerring precision. AI-driven attacks harness the power of intelligent algorithms to outsmart traditional defenses. They include the use of polymorphic phishing and deepfake videos to create irresistible traps, putting your digital life on the line.

On a busy January morning in 2024, an employee at British engineering firm Arup received an urgent video call. It was the CFO and several high-level staff, asking for quick action on a merger. Convinced he was following orders, the employee authorized 15 transfers totaling $25 million to various bank accounts. But the devastating truth emerged a week later: Every single person in that video call (except for the employee) was an AI-generated deepfake.

Meanwhile, nearly 90 TikTok content creators used deepfakes of Latino journalists to spread misinformation to Spanish-speaking audiences in August 2025. The videos sowed fear, confusion, and distrust.

What once felt like a fringe possibility, AI-enabled cybercrime, is now mainstream.

Below, we explore AI-driven phishing attacks and how they exploit our trust in the voices of authoritative sources.

The AI phishing trap: What makes polymorphic phishing so hard to spot?

Phishing isn’t new.

Remember the Nigerian Prince scam emails of yesteryear?

You can spot them a mile away.

But the Nigerian Prince is still alive and well, with the help of ChatGPT.

In 2023, Abnormal AI researchers uncovered more than 1,000 Nigerian Prince scam emails targeting organizations. With AI, those emails are no longer sloppy, error-ridden messages. They’re polished communications designed to entrap you.

This brings us to an important question: “Why do phishing emails generated by AI seem so real?”

Here’s how it’s done: First, scammers use AI to scrape your social media profiles. With intel on where you work, what your hobbies are, and who’s in your circle, they write emails that look like they’re from your spouse, relatives, or work colleagues.

And now, they have an additional ace up their sleeve: AI polymorphic phishing. In early 2025, KnowBe4 researchers found that at least one polymorphic feature was present in 76% of all phishing attacks.

Polymorphic emails don’t just swap a few words here and there. They adapt in real time, rewriting subject lines, randomizing sender display names, changing links, and tailoring content so precisely, you’re apt to trust the message.

It’s the dynamic nature of polymorphic emails that allows attackers to bypass traditional rule-based or signature-based detection tools.
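To see why exact-match signatures struggle here, consider a minimal sketch. It assumes a filter that blocks messages by comparing a SHA-256 fingerprint against a blocklist; the messages, the `.test` domain, and the function names are all made up for illustration, and real filters are more elaborate than this.

```python
import hashlib

def signature(message: str) -> str:
    """Return a SHA-256 fingerprint of the exact message text."""
    return hashlib.sha256(message.encode("utf-8")).hexdigest()

# Hypothetical phishing message that the filter has already seen and blocked.
known_bad = "Subject: Invoice overdue\nPlease pay at http://pay.example.test/a1"
blocklist = {signature(known_bad)}

# A polymorphic variant: same lure, but a new subject line and a new link.
variant = "Subject: Payment reminder\nPlease pay at http://pay.example.test/b7"

def is_blocked(message: str) -> bool:
    """Exact-match lookup, the way a simple signature filter works."""
    return signature(message) in blocklist

print(is_blocked(known_bad))  # True
print(is_blocked(variant))    # False
```

Because any change to the text produces a completely different fingerprint, every rewritten variant looks brand new to a filter like this one.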

So, what does this mean for you?

It means emails can alter their appearance based on how you respond. If you click on an email link but don’t enter your credentials, the AI will send you a convincing follow-up message to get you to act.

So, how do you fight back? Read on for five (5) easy ways to outsmart the scammers.

FBI warns Gmail users of sophisticated AI-driven phishing attacks

If there’s one opening line you’ve seen more times than you can count, it’s either “I hope this email finds you well” or “I hope this email finds you in good spirits.”

Lately, some experts have been flagging the use of these openers in AI-generated email campaigns.

But here’s the truth: Not every email that starts that way is automatically an AI-driven phishing attack.

So how can you tell the difference? The FBI has sounded the alarm on this very question. Thanks to LLM (large language model) advances, scam emails don’t just sound correct – they sound professional, even friendly, with flawless grammar and wording that matches your local dialect or industry.

That’s why it’s important to remember: It’s not what’s written, it’s what they’re trying to get you to do.

So, this is how you protect yourself:

- Only open links or download attachments that are requested or expected from known senders (if in doubt, confirm with the sender through another communication channel, like a phone call or a new email)

- Hover over every link to see its true destination

- Check the sender’s email address

- Never share sensitive info over email, even if it appears to come from someone in a position of authority

- Always verify with official channels before clicking or replying

And remember: Legitimate companies will never email or text you with a link to update your personal or payment info.
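The link and sender checks above can also be expressed in code. This is a rough sketch using only Python's standard library; the function names and the `example-bank.test` domain are assumptions for illustration, and it's no substitute for verifying through official channels.

```python
from email.utils import parseaddr
from urllib.parse import urlparse

def link_host(url: str) -> str:
    """Return the lowercase hostname a link actually points to."""
    return (urlparse(url).hostname or "").lower()

def host_matches(host: str, trusted_domain: str) -> bool:
    """True if host is the trusted domain itself or one of its subdomains."""
    trusted_domain = trusted_domain.lower()
    return host == trusted_domain or host.endswith("." + trusted_domain)

def sender_domain(from_header: str) -> str:
    """Extract the domain of the real address, ignoring the display name."""
    _, address = parseaddr(from_header)
    return address.rpartition("@")[2].lower()

TRUSTED = "example-bank.test"  # hypothetical domain you already trust

# The trusted name appears in this link, but the real host ends elsewhere.
lookalike = "https://example-bank.test.login-check.example/update"
print(host_matches(link_host(lookalike), TRUSTED))  # False

# The display name says "Example Bank", but the address uses a "1" for the "l".
print(sender_domain('"Example Bank" <alerts@examp1e-bank.test>') == TRUSTED)  # False
```

The point of both checks is the same: ignore the friendly label and look at where the link or the address really leads.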

While AI-driven phishing attacks are ensnaring thousands, there’s a darker threat using not just words, but faces and voices to deceive.

Deepfakes take the art of impersonation to new, disturbing levels, which raises the question: Shouldn’t making or sharing a deepfake be illegal?

The answer isn’t as clear-cut as you may hope.

Is deepfake AI illegal?

Here’s the bad news: Owning or using AI tools that are capable of creating deepfakes isn’t illegal.

Just ask Senator Amy Klobuchar, who was tried and found guilty in the court of public opinion for slander.

Thanks to deepfake technology, a video of her speech from a Senate Judiciary hearing on data privacy went viral. Except, it showed her saying completely outrageous, offensive things about Sydney Sweeney’s jeans ad. The fabrication was so convincing that even the Senator herself was shaken.

She heard her own voice speaking words she never said. TikTok took it down, and Meta flagged it as fake. But the only response from X was to add a community note.

It was a frustrating experience, which shows just how powerless we all are against AI lies.

That’s why Klobuchar is a leading advocate of the bipartisan NO FAKES Act, which would hold platforms accountable and give victims the power to take down AI deepfakes. It builds on laws like the Take It Down Act, signed into law in May 2025, which criminalizes nonconsensual deepfake intimate imagery.

But here’s the catch: The Take It Down Act still carves out exceptions for satire, political commentary, or newsworthy content. So, creating and sharing a deepfake is permissible, provided it complies with legal requirements.

The takeaway is clear: Even a U.S. senator caught in a deepfake storm faces an uphill battle, as there’s no legal shield for reputations damaged by deepfake media.

AI-imagined personas: What is synthetic identity theft and how to detect it

How synthetic identities are the heart of AI-driven attacks

While deepfake fraud has reshaped the landscape of deception, synthetic or AI-driven identity theft takes the dangers to uncharted waters. Imagine scammers having the ability to falsify not just voices or faces but also entire personas. This is synthetic identity fraud, where AI-imagined entities can open new credit lines, make convincing calls, and steal from you.

Synthetic identities are “ghost profiles” stitched together with stolen Social Security numbers, fabricated details, and AI-generated photos and recordings.

They “hide” in the background, gaining trust and building creditworthiness before vanishing with millions in ill-gotten gains. Meanwhile, you’re liable for the loss because your stolen Social Security number ties you to the fraud.

The risks don’t stop there. AI-powered vishing is emerging as a potent tool for creating these synthetic identities. With AI vishing, the scammers can impersonate authority figures to get you to “confirm” your Social Security number, which they’ll combine with other bits of information to create synthetic personas.

Recent research shows that people perceive an AI-generated voice to be the same as its real counterpart approximately 80% of the time. So, it’s no surprise that AI vishing scams have ensnared everyone from executives to grandparents.

In March of this year, 25 people were charged with participating in a “Grandparent Scam” that defrauded seniors out of more than $21 million in 40+ states. The scammers spoofed caller IDs to make incoming calls appear as if they came from a grandchild. They also used AI to clone the grandchild’s voice.

Here are six (6) ways to protect against AI-generated phone scams:

- Double check the source. If you get an urgent or emotionally charged call asking for money, don’t rely on Caller ID alone to help you verify the call. Remember: Even caller IDs can be spoofed. Your best bet is to hang up and call the person directly.

- Pause before you react. Scammers hope you’re less likely to notice discrepancies in a scam call. They’ll capitalize on your discomfort to pressure you into acting quickly. So, you’ll want to remain calm and ask yourself: Does the message really sound like the person you know?

- Have a “safe word” for calls. Pick a unique “crisis” word only you and your family know. If the caller can’t reply with the right word, hang up and call your loved one directly.

- Trust your instincts. If you receive a suspicious voicemail, ask a friend or loved one for a second opinion, or check the Better Business Bureau site for info about known scams.

- Avoid answering calls from unfamiliar numbers. If you answer such a call accidentally, hang up. And this is important: Never answer a caller’s questions that can be answered with “yes.” With a recording of you saying it, the caller can use your voice to confirm a major purchase, especially if they already have your credit card number.

- Limit where you post your voice online. AI needs very little audio to imitate a person’s voice. So, consider limiting audio recordings of your voice and be sure to set your social media profiles to private.

Beyond mimicking faces and voices, AI is also quietly mastering another art: predicting what passwords you’ll choose next. The question is, how?

Is your digital footprint making it easier for AI to crack your passwords?

For decades, attackers relied on brute-force attacks, which tested every possible character combination.

But it was a painfully slow process.
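A bit of arithmetic shows why brute force is so slow. The sketch below assumes an attacker who can test one billion guesses per second. That rate is an illustrative assumption, not a measured figure, and the function names are made up for this example.

```python
def keyspace(alphabet_size: int, length: int) -> int:
    """Number of possible passwords of exactly `length` characters."""
    return alphabet_size ** length

def seconds_to_exhaust(alphabet_size: int, length: int, guesses_per_second: float) -> float:
    """Worst-case time to try every combination at a fixed guess rate."""
    return keyspace(alphabet_size, length) / guesses_per_second

RATE = 1e9  # assumed guesses per second, for illustration only
SECONDS_PER_YEAR = 365 * 24 * 3600

# 8 lowercase letters: 26 possible symbols per position.
print(seconds_to_exhaust(26, 8, RATE) / 60, "minutes")

# 8 characters drawn from 94 printable ASCII symbols.
print(seconds_to_exhaust(94, 8, RATE) / 86400, "days")

# 16 characters drawn from the same 94 symbols.
print(seconds_to_exhaust(94, 16, RATE) / SECONDS_PER_YEAR, "years")
```

At the assumed rate, eight lowercase letters fall in a few minutes, while 16 random characters from the full symbol set would take more than 10^15 years. Each extra character multiplies the work, which is why attackers look for smarter shortcuts.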

Today, AI password cracking tools have changed the game completely.

Combined with ultra-fast GPUs, they can process billions of guesses in seconds, making them thousands of times more efficient than brute force tactics.

PassGPT, for instance, can guess 20% more passwords than state-of-the-art GAN models. Here’s how: by studying the digital footprint you leave behind daily.

AI models trained on millions of user-generated credentials learn human tendencies, while GPUs provide massive parallel processing, allowing millions of guesses per second.

So, could your habits be handing the keys of your accounts over to scammers?

What happens when machines learn your password habits?

Research shows that AI password cracking tools can crack over 50% of common user-generated passwords within minutes, including many that meet complexity rules.

For example, AI models know that many users replace “a” with “@” and add “123” to their passwords.

Some of the most advanced tools use hybrid approaches, blending AI with dictionary and brute force techniques. They refine their guesses in real time based on feedback. This ability to learn from patterns is what sets AI password cracking tools apart.
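You can turn the same insight around and use it defensively. Here is a minimal sketch of a checker that undoes common character swaps and strips trailing digits to see whether a password is just a dictionary word in disguise. The swap table, the tiny word list, and the function names are assumptions for illustration; a real checker would use a much larger dictionary.

```python
import re

# Common character swaps, mapped back to the letter they usually replace.
LEET = str.maketrans({
    "@": "a", "4": "a", "3": "e", "1": "i", "!": "i",
    "0": "o", "$": "s", "5": "s", "7": "t",
})

# Tiny illustrative word list (hypothetical).
COMMON_WORDS = {"password", "dragon", "sunshine", "welcome"}

def candidates(password: str) -> set[str]:
    """Return the de-swapped password, with and without its trailing digits/symbols."""
    lowered = password.lower()
    stripped = re.sub(r"[\d!@#$%^&*]+$", "", lowered)
    return {lowered.translate(LEET), stripped.translate(LEET)}

def is_predictable(password: str) -> bool:
    """True if the password reduces to a common word after undoing the usual tricks."""
    return not COMMON_WORDS.isdisjoint(candidates(password))

print(is_predictable("P@ssword123"))   # True
print(is_predictable("Sunsh1ne!"))     # True
print(is_predictable("kV9#tLq2xR!m"))  # False
```

If a dozen lines of code can see through these tricks, a model trained on millions of leaked passwords certainly can.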

Add AI’s ability to learn from keystroke dynamics and typing patterns, and the risk deepens.

So, the question isn’t whether your password is strong enough. It’s how much AI already knows about your behavior, preferences, and habits without you realizing it.

The implications are ominous: If machines understand how you create passwords, should you even use passwords?

Fighting fire with fire: AI versus AI in cyber defense

What is phishing detection?

So, you may be thinking, with the risk of phishing scams, should you toss out your passwords?

Here's the truth: Passkeys, with their robust security and ability to deliver faster logins, are the future. They’re built to stop phishing by ensuring your login is cryptographically bound to the site you’re on.

BUT passkeys aren’t yet universal.

In fact, passwords are still your first line of defense.
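What does “cryptographically bound to the site” look like in practice? In WebAuthn, the standard behind passkeys, the browser records the page’s origin in the client data, and the authenticator includes a SHA-256 hash of the site’s relying party ID. The sketch below shows only those two server-side checks. Signature and challenge verification are deliberately left out, and `make_assertion`, the function names, and the `.test` domains are hypothetical stand-ins for illustration.

```python
import hashlib
import json

def make_assertion(origin: str, rp_id: str) -> tuple[bytes, bytes]:
    """Hypothetical stand-in for what the browser and authenticator produce."""
    client_data_json = json.dumps(
        {"type": "webauthn.get", "challenge": "c2FtcGxl", "origin": origin}
    ).encode("utf-8")
    rp_id_hash = hashlib.sha256(rp_id.encode("utf-8")).digest()
    flags = b"\x01"                      # user-present bit set
    sign_count = (1).to_bytes(4, "big")  # signature counter
    return client_data_json, rp_id_hash + flags + sign_count

def origin_binding_ok(client_data_json: bytes, authenticator_data: bytes,
                      expected_origin: str, rp_id: str) -> bool:
    """Check the origin and the relying party ID hash (signature check omitted)."""
    client_data = json.loads(client_data_json)
    origin_ok = client_data.get("origin") == expected_origin
    expected_hash = hashlib.sha256(rp_id.encode("utf-8")).digest()
    rp_ok = authenticator_data[:32] == expected_hash
    return origin_ok and rp_ok

EXPECTED_ORIGIN = "https://example-bank.test"
RP_ID = "example-bank.test"

real = make_assertion("https://example-bank.test", "example-bank.test")
fake = make_assertion("https://examp1e-bank.test", "examp1e-bank.test")

print(origin_binding_ok(*real, EXPECTED_ORIGIN, RP_ID))  # True
print(origin_binding_ok(*fake, EXPECTED_ORIGIN, RP_ID))  # False
```

The browser fills in the origin, not you, so a lookalike site can’t talk you into handing over a login that works on the real one.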

Before we talk about passwords, let’s clear up something important: What is AI phishing detection and why should you care?

In short, AI phishing detection is like having round-the-clock defense for your inbox. It uses advanced machine learning and pattern recognition to identify behavior that doesn’t fit with your usual email traffic. Its ability to constantly learn and adapt can help stop attacks before they reach you.
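To make “behavior that doesn’t fit with your usual email traffic” concrete, here is a toy sketch. It is not machine learning and not how any particular product works: it’s a hand-written stand-in that remembers which sender domains you normally hear from and adds a point for a never-seen sender and another for urgent wording. The class name, the word list, and the scoring are all assumptions for illustration.

```python
import re
from collections import Counter

# Hypothetical list of pressure words often seen in scam subject lines.
URGENT_WORDS = {"urgent", "immediately", "verify", "suspended", "wire"}

class InboxBaseline:
    """Remembers which sender domains are part of your normal traffic."""

    def __init__(self) -> None:
        self.sender_counts: Counter[str] = Counter()

    def learn(self, sender_domain: str) -> None:
        """Record one legitimate message from this domain."""
        self.sender_counts[sender_domain.lower()] += 1

    def score(self, sender_domain: str, subject: str) -> int:
        """Return 0 to 2: one point per way the message deviates from the baseline."""
        points = 0
        if self.sender_counts[sender_domain.lower()] == 0:
            points += 1  # sender never seen before
        words = set(re.findall(r"[a-z]+", subject.lower()))
        if words & URGENT_WORDS:
            points += 1  # subject uses pressure language
        return points

baseline = InboxBaseline()
for _ in range(3):
    baseline.learn("example-corp.test")

print(baseline.score("example-corp.test", "Lunch on Friday"))              # 0
print(baseline.score("examp1e-corp.test", "Urgent: verify your account"))  # 2
```

Real detection tools learn far richer patterns from far more data, but the idea is the same: know what normal looks like, then flag what doesn’t fit.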

Let’s take a look at some of the best phishing detection software on the market for both consumers and businesses.

| AI phishing detection software | Key features | Ideal for |
| --- | --- | --- |
| | | Consumers and businesses seeking all-in-one protection across devices |
| | | Individuals and small businesses looking for proactive scam detection |
| Graphus AI phishing defense | | Businesses needing scalable enterprise-ready AI phishing defense |
| | | Security-conscious organizations using platforms like Microsoft 365, Google Workspace, and Exchange Online |
| | | Businesses of all sizes looking for scalable, cloud-based email security and user awareness solutions |
| | | Businesses needing comprehensive email security and data protection for regulatory compliance |
| Barracuda Networks | | Small to large businesses seeking affordable all-in-one email security solutions with strong spam and AI phishing detection |
| | | Larger organizations requiring integrated cloud email security with broad threat protection capabilities |

While the above overview shares the best phishing detection software for spotting AI-driven attacks, it’s important to research your options before choosing any product.

Consulting with security experts, reading independent reviews, and reviewing product demos can assist in making a decision that fits your unique needs.

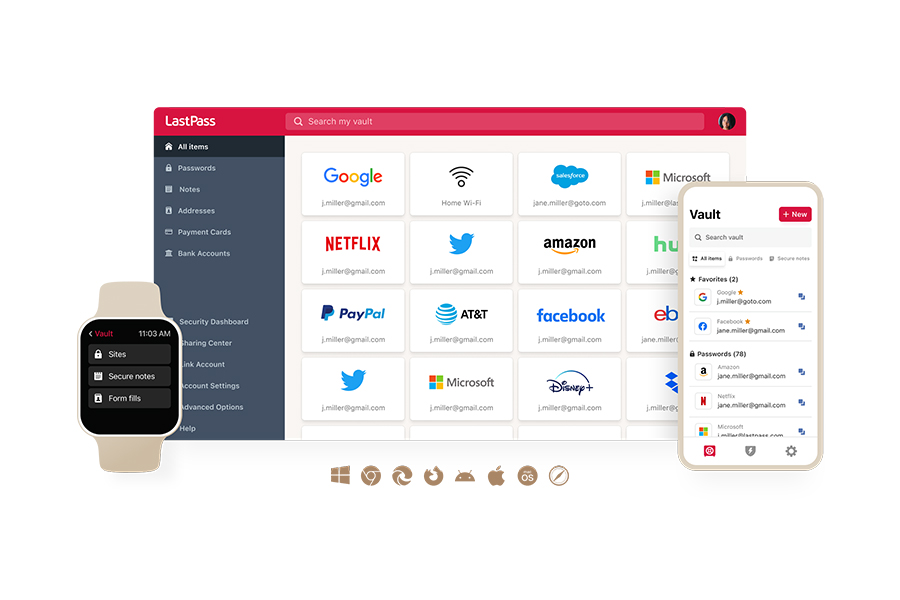

That said, the shield doesn’t stop at phishing detection software. LastPass can serve as your ally against AI-driven, credential-based attacks, with features built for the threats you face.

Passwords 2.0: How LastPass keeps you safe

Let's face it: Even the best detection software can’t protect your passwords, the golden keys to your digital identity.

That’s where an award-winning, Secure Access provider like LastPass comes in. With LastPass, you get:

- Easy, secure password creation, which lets you customize our built-in password generator to align with the latest NIST password guidelines. This means you can create strong passwords without breaking a sweat. Best of all, cracking a truly random 16-character password remains computationally expensive and impractical, even with a supercharged GPU setup.

- AES-256 encryption, a cryptographic standard trusted by militaries, governments, and security experts worldwide.

- Phishing-resistant FIDO2 MFA, which blocks AI-driven phishing attempts or bot logins. With options like passkeys and hardware security keys, this means ironclad security for both your personal and business logins, significantly reducing your risk of AI-enabled identity theft.

- Smart autofill, which enters your information on verified sites only and after explicit approval from you. This blocks AI-driven phishing sites from harvesting your credentials, keeping you and your business safe from credential-based attacks.

- Encrypted URLs, which means every URL is stored in an encrypted state. Even if attackers break in, they’ll still need to crack the ciphertext to identify the login credentials associated with every stored URL. This means your accounts stay safe from unauthorized access.

- 24/7 Dark Web Monitoring, which scans for leaked credentials on the Dark Web. Early detection means you can act quickly to update your passwords and protect your personal and business data from exploitation.
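To give a feel for what a truly random 16-character password means, here is a minimal sketch built on Python’s `secrets` module. It is not LastPass’s generator; the alphabet, the function names, and the default length are assumptions for illustration.

```python
import math
import secrets
import string

# 52 letters + 10 digits + 32 punctuation marks = 94 symbols.
ALPHABET = string.ascii_letters + string.digits + string.punctuation

def generate_password(length: int = 16) -> str:
    """Pick each character independently using a cryptographically secure RNG."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))

def entropy_bits(length: int, alphabet_size: int = len(ALPHABET)) -> float:
    """Bits of entropy for a password whose characters are chosen uniformly at random."""
    return length * math.log2(alphabet_size)

print(generate_password())
print(round(entropy_bits(16), 1), "bits")
```

With 94 possible symbols in each of 16 positions, the result carries roughly 105 bits of entropy. Just as important, there’s no human habit in it for a model to learn.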

Together, phishing detection software and LastPass form a robust defense against AI phishing attacks, keeping the most important asset – your digital identity – safe.

I’ve been using LastPass for a while now, and it’s become one of the tools I rely on daily. It makes managing passwords effortless — no more struggling to remember dozens of logins or reusing the same weak password everywhere. I especially like how seamless it is across devices: whether I’m on my phone or computer, everything syncs smoothly. The auto-fill feature is fast and accurate, and the built-in password generator means I can always use strong, unique passwords without hassle (Joel G, operations manager at small business, verified G2 reviewer).

As the owner of a small business, I have lots of suppliers and customers sites available quickly to me, and Last Pass works seamlessly across all my devices (Nikki C, small business owner, verified G2 reviewer).

To secure your digital identity and enjoy greater peace of mind, get your LastPass free trial today (no credit card required).

| Type of account | Who it’s for | Free trial? |
| --- | --- | --- |
| Premium | For personal use across devices | Yes |
| Families | For parents, kids, roommates, friends, and whoever else you call family (6 Premium accounts) | Yes |
| Teams | For your small business or startup | Yes |
| Business | For small or medium-sized businesses | Yes |
| Business Max | Advanced protection and secure access for any business | Yes |

Sources:

- https://www.cnn.com/2024/05/16/tech/arup-deepfake-scam-loss-hong-kong-intl-hnk

- https://www.fcc.gov/consumers/guides/stop-unwanted-robocalls-and-texts

- https://www.nbcnews.com/news/latino/fake-ai-videos-spanish-language-journalists-misinformation-rcna225201

- https://abnormal.ai/blog/generative-ai-nigerian-prince-scams

- https://www.startupdefense.io/cyberattacks/polymorphic-phishing

- https://www.securityweek.com/ai-powered-polymorphic-phishing-is-changing-the-threat-landscape/

- https://salazar.house.gov/media/press-releases/congresswoman-salazar-introduces-no-fakes-act-0

- https://www.klobuchar.senate.gov/public/index.cfm/2025/5/klobuchar-s-bipartisan-take-it-down-act-signed-into-law

- https://www.privacy.com/blog/vishing-scam

- https://arxiv.org/abs/2410.03791

- https://www.fcc.gov/consumers/scam-alert/grandparent-scams-get-more-sophisticated

- https://www.ky3.com/2025/09/02/your-side-better-business-bureau-warns-grandparent-scams-defiance-mo-man-implicated-international-scheme/

- https://www.experian.com/blogs/ask-experian/what-is-say-yes-scam/

- https://www.phishprotection.com/phishing-awareness/top-10-best-anti-phishing-solutions-to-protect-your-business-in-2025

- https://support.avast.com/en-en/article/scam-guardian-faqs/#pc